How To Run A Python Job#

Introduction#

All members of a project in Hopsworks can launch the following types of applications through a project's Jobs service:

- Python (Hopsworks Enterprise only)

- Apache Spark

Launching a job of any type is a very similar process; what mostly differs between job types is the set of configuration parameters each type comes with. Hopsworks supports scheduling jobs to run on a regular basis, e.g. backfilling a Feature Group by running your feature engineering pipeline nightly. Scheduling can be done through both the UI and the Python API; check out our Scheduling guide.
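As a rough illustration of scheduling from the Python API, the sketch below builds a nightly cron expression and passes it to a job. It assumes the `Job` object (created as in the Code section of this guide) exposes a `schedule()` method taking `cron_expression` and `start_time`, and that cron expressions use the Quartz field order. The helper names `nightly_cron` and `schedule_nightly` are hypothetical, and the Scheduling guide remains the authoritative source.

```python
from datetime import datetime, timezone


def nightly_cron(hour: int = 2, minute: int = 0) -> str:
    """Build a Quartz-style cron expression that fires once per day."""
    if not (0 <= hour <= 23 and 0 <= minute <= 59):
        raise ValueError("hour must be 0-23 and minute must be 0-59")
    # Assumed field order: second minute hour day-of-month month day-of-week year
    return f"0 {minute} {hour} ? * * *"


def schedule_nightly(job, hour: int = 2, minute: int = 0):
    """Schedule `job` to run nightly, starting from now (UTC).

    Assumes `job` exposes schedule(cron_expression=..., start_time=...).
    """
    return job.schedule(
        cron_expression=nightly_cron(hour, minute),
        start_time=datetime.now(tz=timezone.utc),
    )
```

With a job at hand, `schedule_nightly(job, hour=1)` would request a run at 01:00 every night.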

Kubernetes integration required

Python Jobs are only available if Hopsworks has been integrated with a Kubernetes cluster.

Hopsworks can be integrated with Amazon EKS, Azure AKS and on-premise Kubernetes clusters.

UI#

Step 1: Jobs overview#

The image below shows the Jobs overview page in Hopsworks, which is accessed by clicking Jobs in the sidebar.

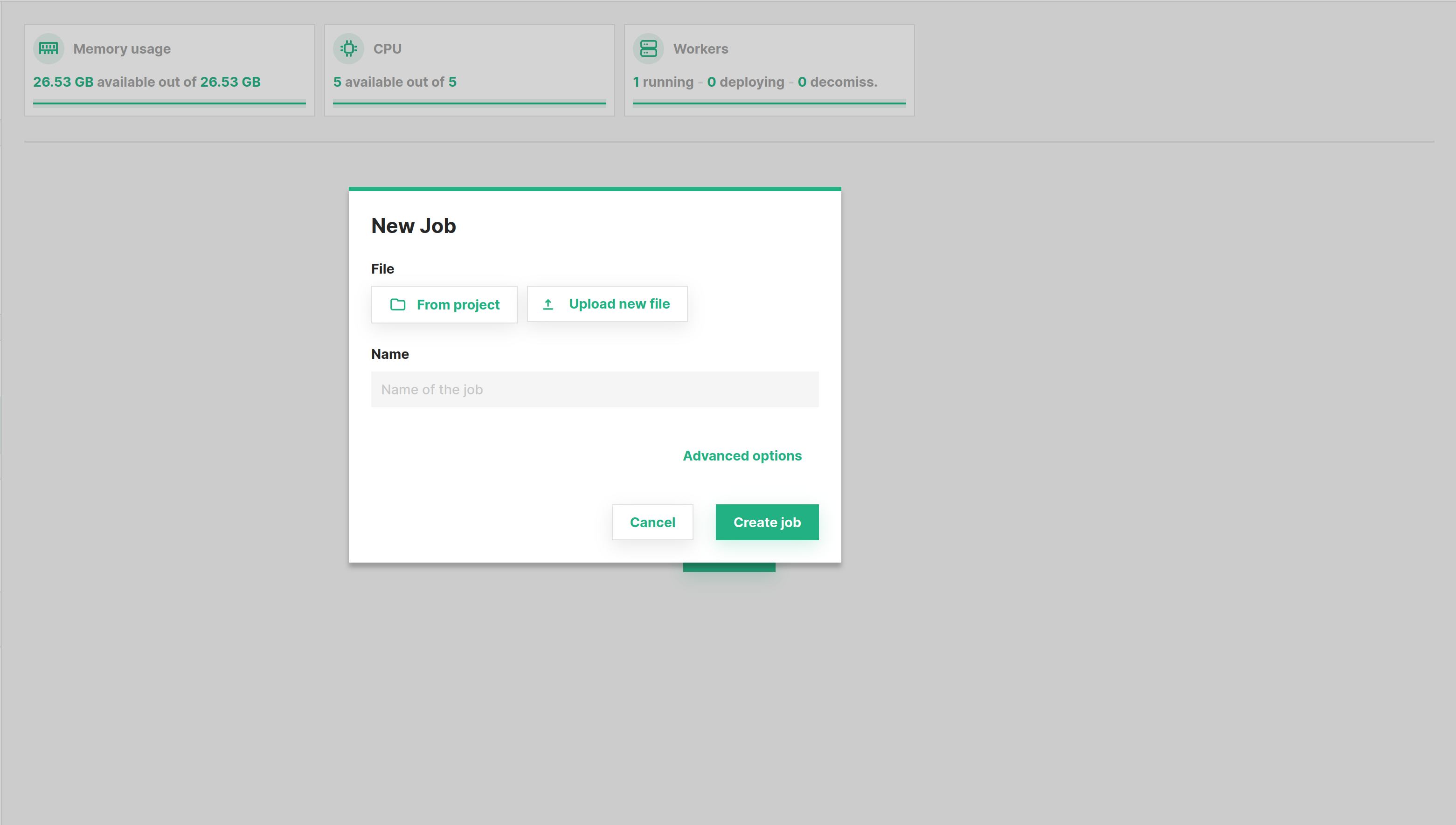

Step 2: Create new job dialog#

By default, the dialog will create a Spark job. To instead configure a Python job, click Advanced options, which will open up the advanced configuration page for the job.

Step 3: Set the script#

The next step is to select the Python script to run. You can either select From project, if the file was previously uploaded to Hopsworks, or Upload new file, which lets you select a file from your local filesystem as demonstrated below.

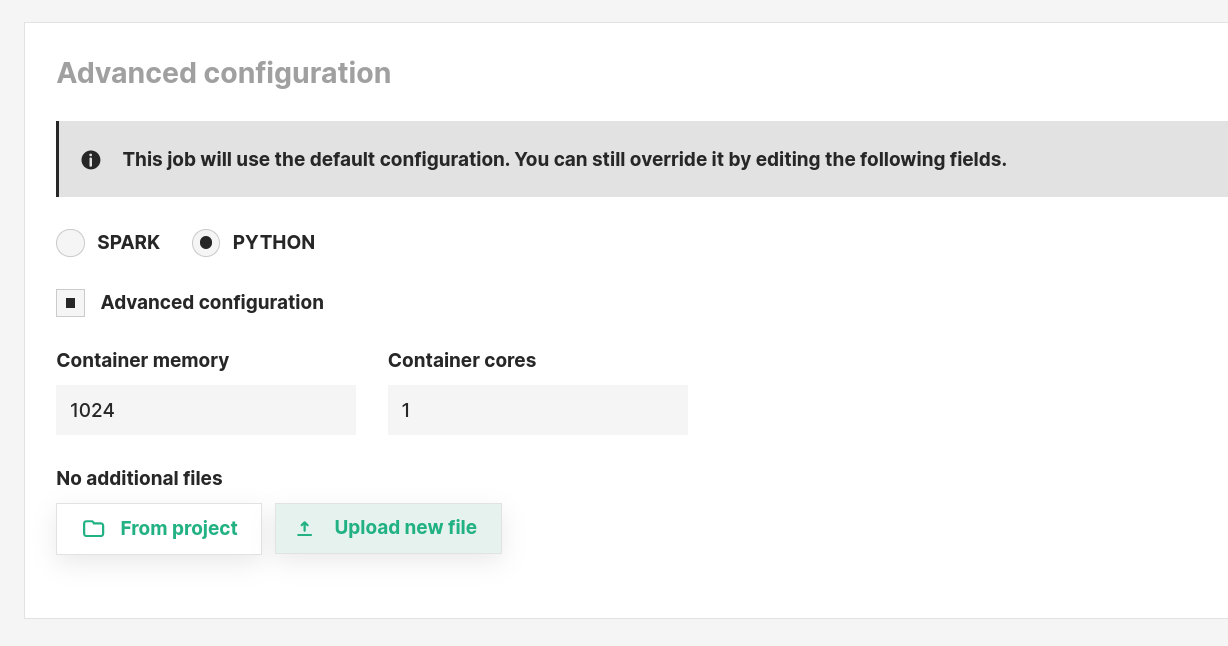

Step 4: Set the job type#

The next step is to set the job type to PYTHON to indicate that it should be executed as a simple Python script. Then click Create New Job to create the job.

Step 5 (optional): Additional configuration#

It is also possible to set the following configuration settings for a PYTHON job.

- Container memory: The amount of memory in MB to be allocated to the Python script

- Container cores: The number of cores to be allocated for the Python script

- Additional files: List of files that will be locally accessible by the application

Step 6: Execute the job#

Now click the Run button to start the execution of the job, and then click on Executions to see the list of all executions.

Once the execution is finished, click on Logs to see the logs for the execution.

Code#

Step 1: Upload the Python script#

This snippet assumes the Python script is in the current working directory and is named script.py.

It will upload the Python script to the Resources dataset in your project.

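```python
import hopsworks

# Log in and get a handle to the project
project = hopsworks.login()

dataset_api = project.get_dataset_api()

# Upload script.py from the current working directory to the Resources dataset
uploaded_file_path = dataset_api.upload("script.py", "Resources")
```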
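For reference, the uploaded script can be any self-contained Python file. The following is a minimal sketch of what `script.py` might contain. The `main` function, the default name and the messages are hypothetical and only illustrate a script that writes to both standard output and standard error.

```python
import sys


def main(argv):
    """Entry point: print a greeting to stdout and a note to stderr."""
    # Use the first command-line argument as the name, if one was passed
    name = argv[1] if len(argv) > 1 else "Hopsworks"
    message = f"Hello from {name}"
    print(message)  # written to standard output
    print("job finished", file=sys.stderr)  # written to standard error
    return message


if __name__ == "__main__":
    main(sys.argv)
```

Keeping the logic in a `main` function makes the script easy to test locally before uploading it.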

Step 2: Create Python job#

In this snippet we use the JobsApi object to get the default job configuration for a PYTHON job, set the Python script to run, and create the Job object.

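```python
jobs_api = project.get_jobs_api()

# Start from the default configuration for a PYTHON job
py_job_config = jobs_api.get_configuration("PYTHON")

# Point the job at the script uploaded in Step 1
py_job_config['appPath'] = uploaded_file_path

job = jobs_api.create_job("py_job", py_job_config)
```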
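The memory and cores settings described in the UI section can presumably be adjusted on the configuration dictionary before it is passed to `create_job`. The sketch below assumes the dictionary holds a nested `resourceConfig` entry with `memory` (in MB) and `cores` keys. These key names are assumptions, so print the dictionary returned by `get_configuration("PYTHON")` to confirm them. The helper `with_resources` is hypothetical.

```python
import copy


def with_resources(config, memory_mb=None, cores=None):
    """Return a copy of a job config dict with optional resource overrides.

    The key names "resourceConfig", "memory" and "cores" are assumptions;
    inspect the dict returned by get_configuration("PYTHON") to confirm them.
    """
    updated = copy.deepcopy(config)  # leave the caller's dict untouched
    resources = updated.setdefault("resourceConfig", {})
    if memory_mb is not None:
        resources["memory"] = int(memory_mb)
    if cores is not None:
        resources["cores"] = cores
    return updated
```

A call such as `py_job_config = with_resources(py_job_config, memory_mb=2048, cores=1)` would then go before `create_job`.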

Step 3: Execute the job#

In this snippet we execute the job synchronously, that is, we wait until it reaches a terminal state, and then download and print the logs.

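```python
# Run the job and block until it reaches a terminal state
execution = job.run(await_termination=True)

# Download logs; returns the local paths of the stdout and stderr log files
out, err = execution.download_logs()

# Print standard output, closing the file when done
with open(out, "r") as f_out:
    print(f_out.read())

# Print standard error, closing the file when done
with open(err, "r") as f_err:
    print(f_err.read())
```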
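Job logs can be long, and a log file may be missing if the execution produced no output. The helper below is a small sketch for printing only the tail of a downloaded log. `read_log` is a hypothetical name, and the code assumes only that `download_logs()` returns local file paths, as in the snippet above.

```python
from pathlib import Path


def read_log(path, max_lines=50):
    """Return the last `max_lines` lines of a log file, or "" if it is absent."""
    if not path or not Path(path).is_file():
        return ""
    # errors="replace" keeps the helper from failing on unexpected bytes
    with open(path, "r", encoding="utf-8", errors="replace") as handle:
        lines = handle.readlines()
    return "".join(lines[-max_lines:])
```

For example, `print(read_log(out))` and `print(read_log(err))` would show the last 50 lines of each log.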

API Reference#

Conclusion#

In this guide you learned how to create and run a Python job.