Project/Connection#

In Hopsworks a Project is a sandboxed set of users, data, and programs (where data can be shared in a controlled manner between projects).

Each Project can have its own Feature Store. However, it is possible to share Feature Stores among projects.

When working with the Feature Store from a programming environment you can connect to a single Hopsworks instance at a time, but it is possible to access multiple Feature Stores simultaneously.

A connection to a Hopsworks instance is represented by a Connection object. Its main purpose is to retrieve the API Key if you are connecting from an external environment and subsequently to retrieve the needed certificates to communicate with the Feature Store services.

The handle can then be used to retrieve a reference to the Feature Store you want to operate on.

Examples#

Connecting from Hopsworks

import hsfs

conn = hsfs.connection()

fs = conn.get_feature_store()

Connecting from Databricks

In order to connect from Databricks, follow the integration guide.

You can then simply connect by using your chosen way of retrieving the API Key:

import hsfs

conn = hsfs.connection(

host="ec2-13-53-124-128.eu-north-1.compute.amazonaws.com",

project="demo_fs_admin000",

hostname_verification=False,

secrets_store="secretsmanager"

)

fs = conn.get_feature_store()

Alternatively you can pass the API Key as a file or directly:

Azure

Use this method when working with Hopsworks on Azure.

import hsfs

conn = hsfs.connection(

host="ec2-13-53-124-128.eu-north-1.compute.amazonaws.com",

project="demo_fs_admin000",

hostname_verification=False,

api_key_file="featurestore.key"

)

fs = conn.get_feature_store()

Connecting from AWS SageMaker

In order to connect from SageMaker, follow the integration guide to set up the API Key.

You can then simply connect by using your chosen way of retrieving the API Key:

import hsfs

conn = hsfs.connection(

host="ec2-13-53-124-128.eu-north-1.compute.amazonaws.com",

project="demo_fs_admin000",

hostname_verification=False,

secrets_store="secretsmanager"

)

fs = conn.get_feature_store()

Alternatively you can pass the API Key as a file or directly:

import hsfs

conn = hsfs.connection(

host="ec2-13-53-124-128.eu-north-1.compute.amazonaws.com",

project="demo_fs_admin000",

hostname_verification=False,

api_key_file="featurestore.key"

)

fs = conn.get_feature_store()

Connecting from Python environment

To connect from a simple Python environment, you can provide the API Key as a file as shown in the SageMaker example above, or provide the value directly:

import hsfs

conn = hsfs.connection(

host="ec2-13-53-124-128.eu-north-1.compute.amazonaws.com",

project="demo_fs_admin000",

hostname_verification=False,

api_key_value=(

"PFcy3dZ6wLXYglRd.ydcdq5jH878IdG7xlL9lHVqrS8v3sBUqQgyR4xbpUgDnB5ZpYro6O"

"xNnAzJ7RV6H"

)

)

fs = conn.get_feature_store()
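Hard-coding the key in a script makes it easy to leak. As a minimal sketch, the key can instead be read from the environment and passed as api_key_value. The environment variable names and both helper functions below are hypothetical and not part of hsfs; only hsfs.connection() and its host, project and api_key_value arguments come from this page.

```python
import os

# Hypothetical environment variable names, chosen for this sketch only.
ENV_HOST = "HOPSWORKS_HOST"
ENV_PROJECT = "HOPSWORKS_PROJECT"
ENV_API_KEY = "HOPSWORKS_API_KEY"


def connection_kwargs_from_env(env=None):
    """Build keyword arguments for hsfs.connection() from environment variables."""
    env = os.environ if env is None else env
    missing = [n for n in (ENV_HOST, ENV_PROJECT, ENV_API_KEY) if not env.get(n)]
    if missing:
        raise KeyError("missing environment variables: " + ", ".join(missing))
    return {
        "host": env[ENV_HOST],
        "project": env[ENV_PROJECT],
        "api_key_value": env[ENV_API_KEY],
    }


def connect_from_env():
    """Open a connection using the variables above."""
    # hsfs is imported lazily so the helper above can be used without it.
    import hsfs

    return hsfs.connection(**connection_kwargs_from_env())
```

With the three variables set, connect_from_env().get_feature_store() would then return the project's default feature store as in the example above.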

Connecting from Hopsworks

import com.logicalclocks.hsfs._

val connection = HopsworksConnection.builder().build();

val fs = connection.getFeatureStore();

Connecting from Databricks

TBD

Connecting from AWS SageMaker

The Scala client version of hsfs is not supported on AWS SageMaker,

please use the Python client.

Sharing a Feature Store#

Connections are project-level. However, feature stores can be shared among projects, so even if you have a connection to one project, you can retrieve a handle to any feature store shared with that project.

To share a feature store, you can follow these steps:

Sharing a Feature Store

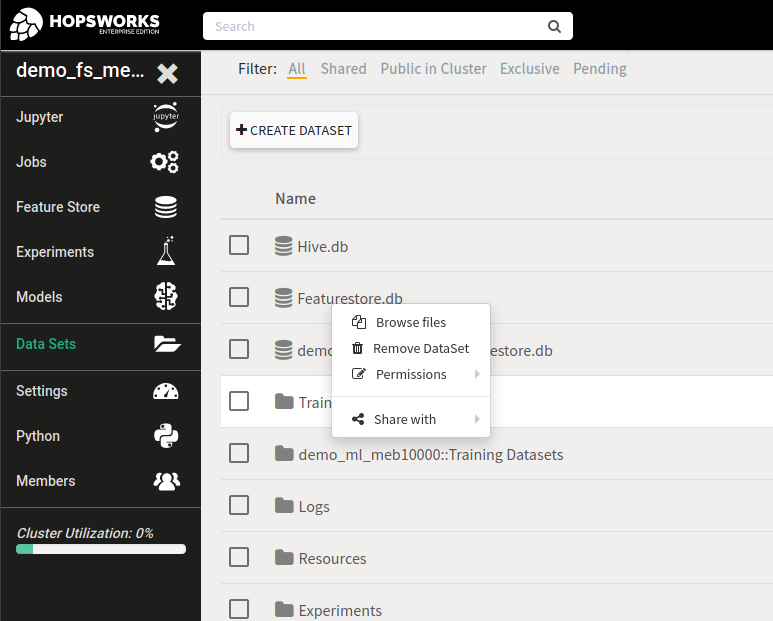

- Open the project of the feature store that you would like to share on Hopsworks.

- Go to the Data Set browser and right click the Featurestore.db entry.

- Click Share with, then select Project and choose the project you wish to share the feature store with.

- Select the permissions level that the project user members should have on the feature store and click Share.

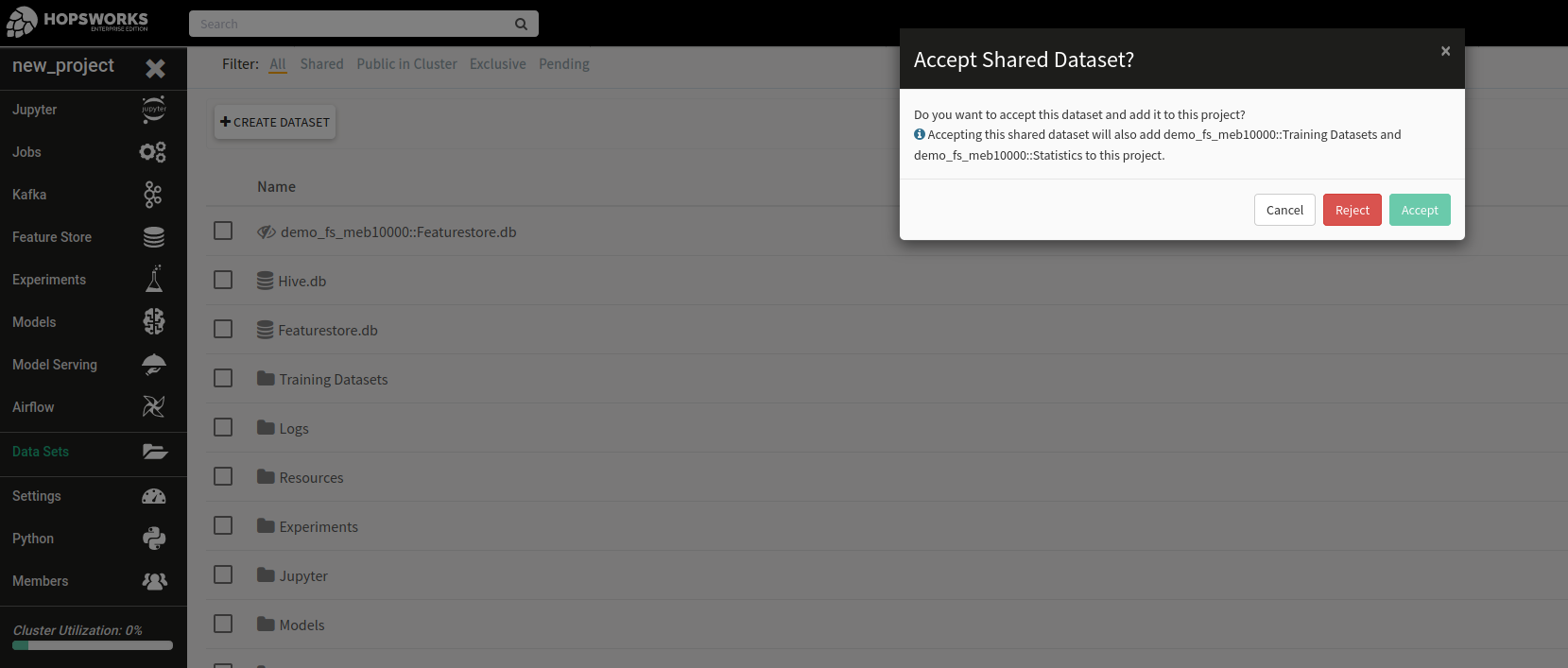

- Open the project you just shared the feature store with.

- Go to the Data Sets browser and there you should see the shared feature store as [project_name_of_shared_feature_store]::Featurestore.db. Click this entry; you will be asked to accept this shared Dataset. Click Accept.

- You should now have access to this feature store from the other project.

Connection Handle#

Connection#

hsfs.connection.Connection(

host=None,

port=443,

project=None,

engine=None,

region_name="default",

secrets_store="parameterstore",

hostname_verification=True,

trust_store_path=None,

cert_folder="hops",

api_key_file=None,

api_key_value=None,

)

A feature store connection object.

The connection is project specific, so you can access the project's own feature store but also any feature store which has been shared with the project you connect to.

This class provides convenience classmethods accessible from the hsfs-module:

Connection factory

For convenience, hsfs provides a factory method, accessible from the top level

module, so you don't have to import the Connection class manually:

import hsfs

conn = hsfs.connection()

Save API Key as File

To get started quickly, without saving the Hopsworks API Key in a secret storage, you can simply create a file with the previously created Hopsworks API Key and place it in the environment from which you wish to connect to the Hopsworks Feature Store.

You can then connect by simply passing the path to the key file when instantiating a connection:

import hsfs

conn = hsfs.connection(

'my_instance', # DNS of your Feature Store instance

443, # Port to reach your Hopsworks instance, defaults to 443

'my_project', # Name of your Hopsworks Feature Store project

api_key_file='featurestore.key', # The file containing the API key generated above

hostname_verification=True # Set to False for self-signed certificates

)

fs = conn.get_feature_store() # Get the project's default feature store

Clients in external clusters need to connect to the Hopsworks Feature Store using an API key. The API key is generated inside the Hopsworks platform, and requires at least the "project" and "featurestore" scopes to be able to access a feature store. For more information, see the integration guides.
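Since the key file grants access to the feature store, it is worth creating it with restrictive permissions. The following is a sketch only: write_api_key_file is a hypothetical helper, not part of hsfs, and the file name featurestore.key is taken from the example above.

```python
import os


def write_api_key_file(api_key, path="featurestore.key"):
    """Write the API key to `path`, accessible by the file owner only."""
    # O_TRUNC overwrites an existing file; mode 0o600 applies when the file
    # is newly created (on POSIX systems).
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    with os.fdopen(fd, "w") as handle:
        # Strip surrounding whitespace that often sneaks in when copy-pasting.
        handle.write(api_key.strip())
    return path
```

The returned path can then be passed as api_key_file when instantiating the connection.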

Arguments

- host (Optional[str]): The hostname of the Hopsworks instance, defaults to None.

- port (int): The port on which the Hopsworks instance can be reached, defaults to 443.

- project (Optional[str]): The name of the project to connect to. When running on Hopsworks, this defaults to the project from which the client is run. Defaults to None.

- engine (Optional[str]): Which engine to use, "spark", "hive" or "training". Defaults to None, which initializes the engine to Spark if the environment provides Spark, for example on Hopsworks and Databricks, or falls back on Hive if Spark is not available, e.g. on local Python environments or AWS SageMaker. This option allows you to override this behaviour. The "training" engine is useful when only feature store metadata is needed, for example training dataset location and label information when a Hopsworks training experiment is conducted.

- region_name (str): The name of the AWS region in which the required secrets are stored, defaults to "default".

- secrets_store (str): The secrets storage to be used, either "secretsmanager", "parameterstore" or "local", defaults to "parameterstore".

- hostname_verification (bool): Whether or not to verify Hopsworks’ certificate, defaults to True.

- trust_store_path (Optional[str]): Path on the file system containing the Hopsworks certificates, defaults to None.

- cert_folder (str): The directory to store retrieved HopsFS certificates, defaults to "hops". Only required when running without a Spark environment.

- api_key_file (Optional[str]): Path to a file containing the API Key. If provided, secrets_store will be ignored. Defaults to None.

- api_key_value (Optional[str]): API Key as string. If provided, secrets_store will be ignored. However, this should be used with care, especially if the notebook or job script is accessible by multiple parties. Defaults to None.
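The default behaviour of the engine argument can be illustrated with a small sketch. This is not the library's implementation: choose_engine is a hypothetical helper, and treating "the environment provides Spark" as "the pyspark package is importable" is an assumption made for illustration.

```python
import importlib.util

VALID_ENGINES = ("spark", "hive", "training")


def choose_engine(engine=None):
    """Mirror the documented default: Spark if available, otherwise Hive."""
    if engine is not None:
        # An explicit value overrides the automatic choice.
        if engine not in VALID_ENGINES:
            raise ValueError(f"engine must be one of {VALID_ENGINES}, got {engine!r}")
        return engine
    # Assumption: Spark counts as "provided" when pyspark can be imported.
    spark_available = importlib.util.find_spec("pyspark") is not None
    return "spark" if spark_available else "hive"
```

Calling choose_engine("training") returns the explicit choice, while choose_engine() picks between "spark" and "hive" depending on the environment.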

Returns

Connection. Feature Store connection handle to perform operations on a

Hopsworks project.

Methods#

close#

Connection.close()

Close a connection gracefully.

This will clean up any materialized certificates on the local file system of external environments such as AWS SageMaker.

Usage is recommended but optional.

connect#

Connection.connect()

Instantiate the connection.

Creating a Connection object implicitly calls this method for you to

instantiate the connection. However, it is possible to close the connection

gracefully with the close() method, in order to clean up materialized

certificates. This might be desired when working on external environments such

as AWS SageMaker. Subsequently you can call connect() again to reopen the

connection.

Example

import hsfs

conn = hsfs.connection()

conn.close()

conn.connect()
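Because calling close() is recommended, one way to make sure it always runs, even when an exception is raised, is the standard library's contextlib.closing, which works with any object that has a close() method. The helper with_connection below is hypothetical and only sketches the pattern.

```python
from contextlib import closing


def with_connection(open_connection, work):
    """Open a connection, run work(conn), and always close the connection."""
    with closing(open_connection()) as conn:
        return work(conn)


# Possible usage, assuming hsfs is installed and a Hopsworks instance is reachable:
#
#   import hsfs
#   with_connection(hsfs.connection, lambda conn: print(conn.get_feature_store()))
```

Any work that needs the connection should happen inside the callback, since the materialized certificates are cleaned up as soon as it returns.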

get_feature_store#

Connection.get_feature_store(name=None)

Get a reference to a feature store to perform operations on.

Defaults to the project's default feature store. To get a shared feature store, the project name of the feature store is required.

Arguments

- name (str): The name of the feature store, defaults to None.

Returns

FeatureStore. A feature store handle object to perform operations on.
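As a sketch of working with several feature stores over one connection, the hypothetical helper below collects the default feature store together with handles to shared ones. It only relies on get_feature_store(name) as described above; passing the owning project's name as name follows that description, and the exact name format expected by your hsfs version is an assumption here.

```python
def get_feature_stores(conn, shared_projects=()):
    """Return (default_store, {project_name: shared_store}) for one connection."""
    # Without a name, the project's own default feature store is returned.
    default_store = conn.get_feature_store()
    shared_stores = {}
    for project_name in shared_projects:
        # Assumption: a shared store is looked up by its owning project's name.
        shared_stores[project_name] = conn.get_feature_store(project_name)
    return default_store, shared_stores
```

For example, get_feature_stores(conn, ["other_project"]) would return the default handle and a dictionary with one entry, where other_project is a placeholder for a project that has shared its feature store.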

get_rule#

Connection.get_rule(name)

Get the data validation rule with the given name.

get_rules#

Connection.get_rules()

Get all rules available for data validation.

setup_databricks#

Connection.setup_databricks(

host=None,

port=443,

project=None,

engine=None,

region_name="default",

secrets_store="parameterstore",

hostname_verification=True,

trust_store_path=None,

cert_folder="hops",

api_key_file=None,

api_key_value=None,

)

Set up the HopsFS and Hive connector on a Databricks cluster.

This method will set up the HopsFS and Hive connectors to connect from a

Databricks cluster to a Hopsworks Feature Store instance. It returns a

Connection object and will print instructions on how to finalize the setup

of the Databricks cluster.

See also the Databricks integration guide.