Azure Machine Learning Designer Integration

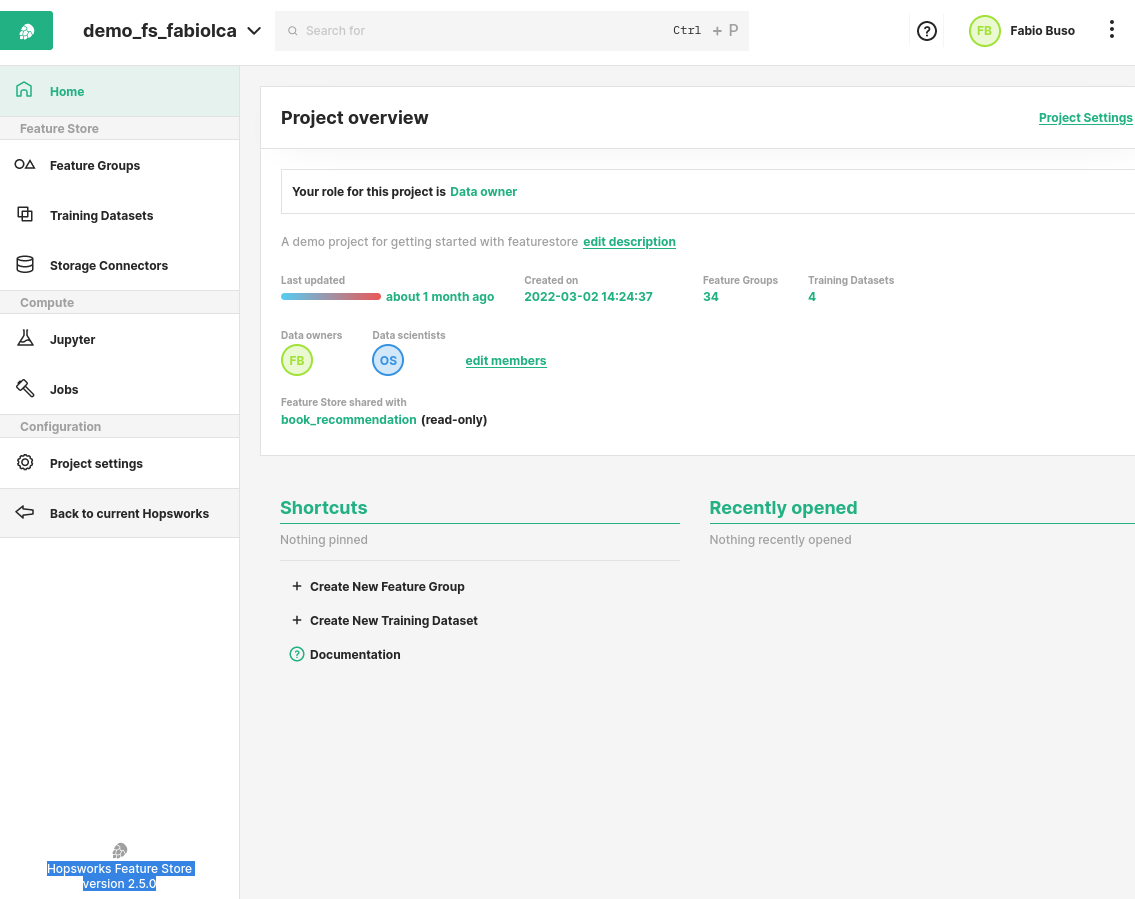

Connecting to the Feature Store from the Azure Machine Learning Designer requires setting up a Feature Store API key for the Designer and installing the HSFS library on the Designer's compute target. This guide explains step by step how to connect to the Feature Store from the Azure Machine Learning Designer.

Network Connectivity

To connect to the Feature Store, ensure that the Network Security Group of your Hopsworks instance on Azure is configured to allow incoming traffic from your compute target on ports 443, 9083 and 9085. See Network security groups for more information. If your compute target is not in the same VNet as your Hopsworks instance and the Hopsworks instance is not accessible from the internet, you will need to configure Virtual Network Peering.
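As a quick sanity check of the network setup described above, the following sketch tries to open a TCP connection to each of the three ports from the machine it runs on. It is a minimal illustration, not part of HSFS: the helper name `check_ports` is hypothetical, the host in the commented usage line is a placeholder, and a successful TCP connection only shows that the port is reachable, not that the service behind it is healthy.

```python
import socket

# Ports named in this guide.
REQUIRED_PORTS = (443, 9083, 9085)


def check_ports(host, ports=REQUIRED_PORTS, timeout=3.0):
    """Return a dict mapping each port to True if a TCP connection succeeds."""
    results = {}
    for port in ports:
        try:
            # create_connection resolves the host and performs the TCP handshake.
            with socket.create_connection((host, port), timeout=timeout):
                results[port] = True
        except OSError:
            # Covers refused connections, timeouts and DNS failures.
            results[port] = False
    return results


# Example usage (placeholder host, replace MY_INSTANCE first):
# print(check_ports("MY_INSTANCE.cloud.hopsworks.ai"))
```

Run it from the compute target itself (for example inside a temporary Execute Python Script step), since the Network Security Group rules apply to traffic coming from that machine.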

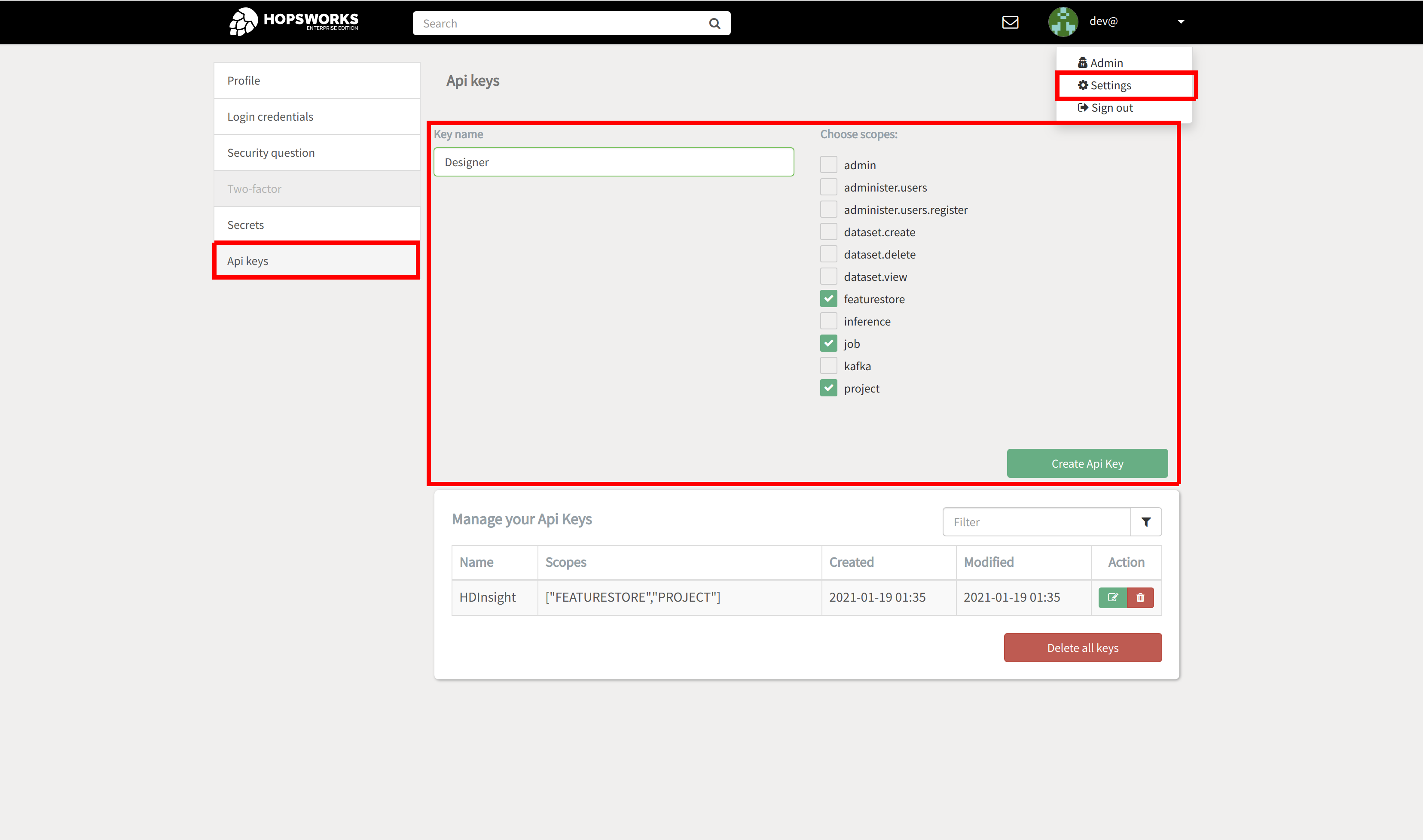

Generate an API key

In Hopsworks, click on your username in the top-right corner and select Settings to open the user settings. Select API keys. Give the key a name and select the job, featurestore and project scopes before creating the key. Copy the key into your clipboard for the next step.

Scopes

The API key should contain at least the following scopes:

- featurestore

- project

- job

Info

You are only able to retrieve the API key once. If you did not manage to copy it to your clipboard, delete it and create a new one.

Connect to the Feature Store

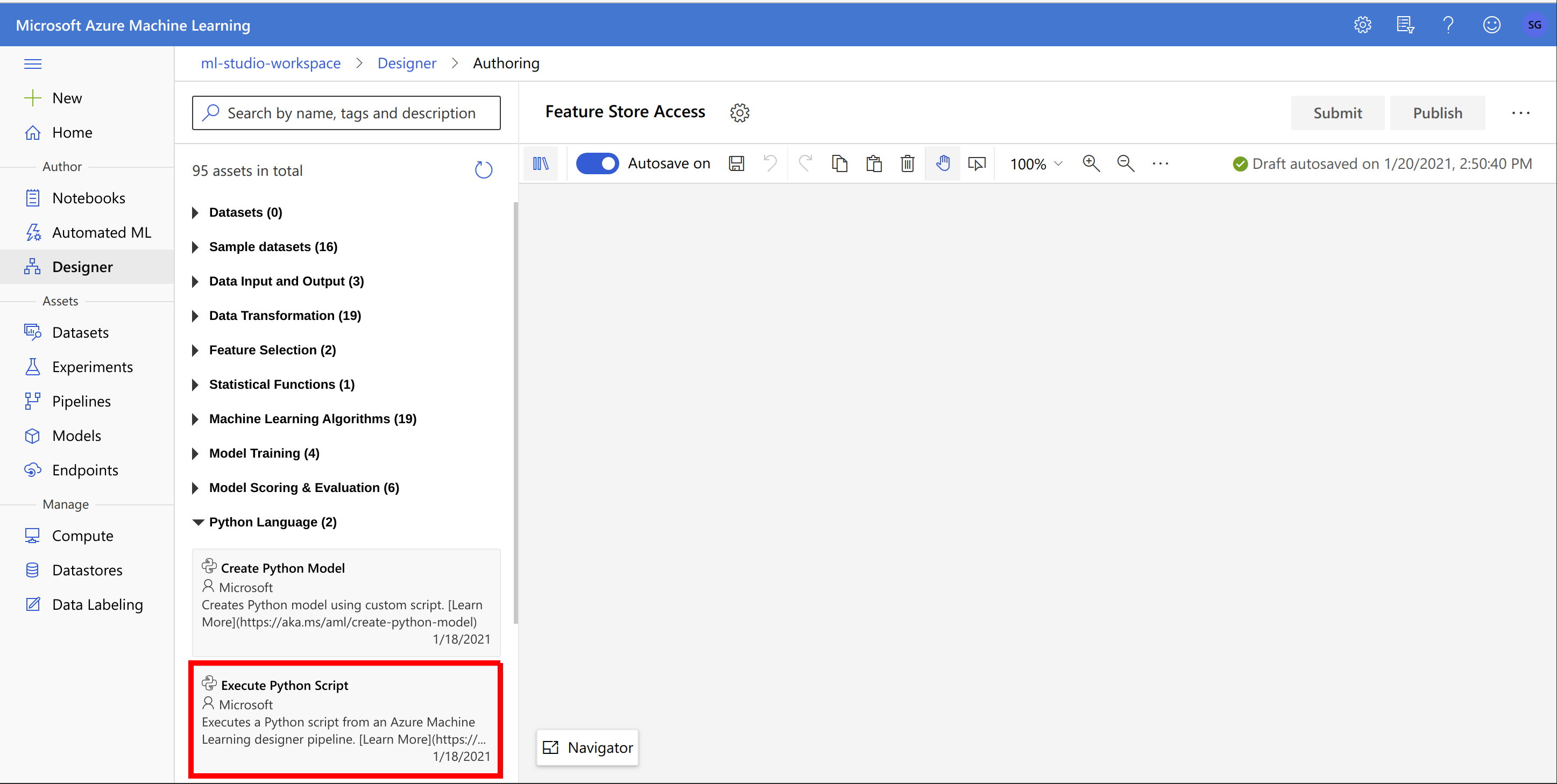

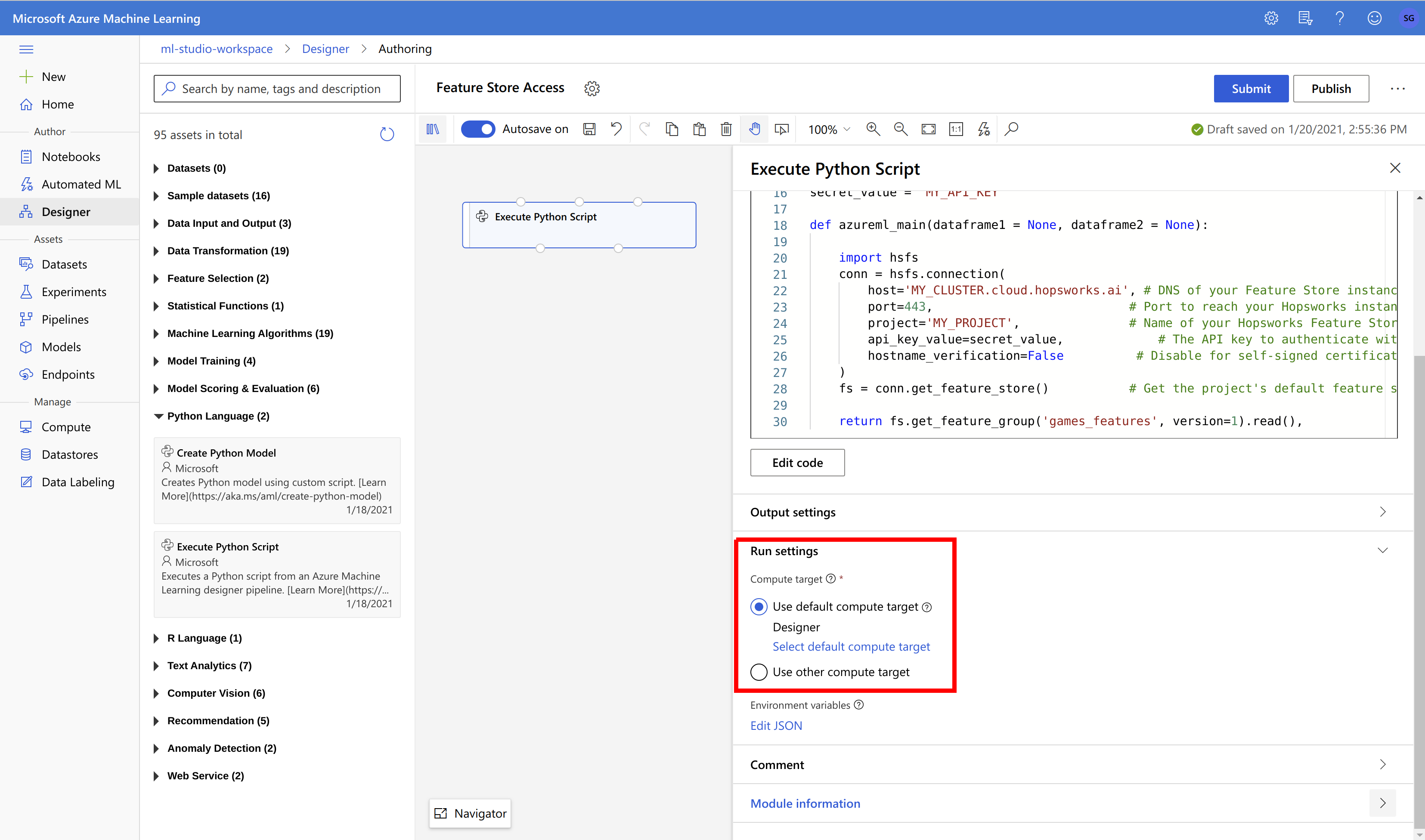

To connect to the Feature Store from the Azure Machine Learning Designer, create a new pipeline or open an existing one:

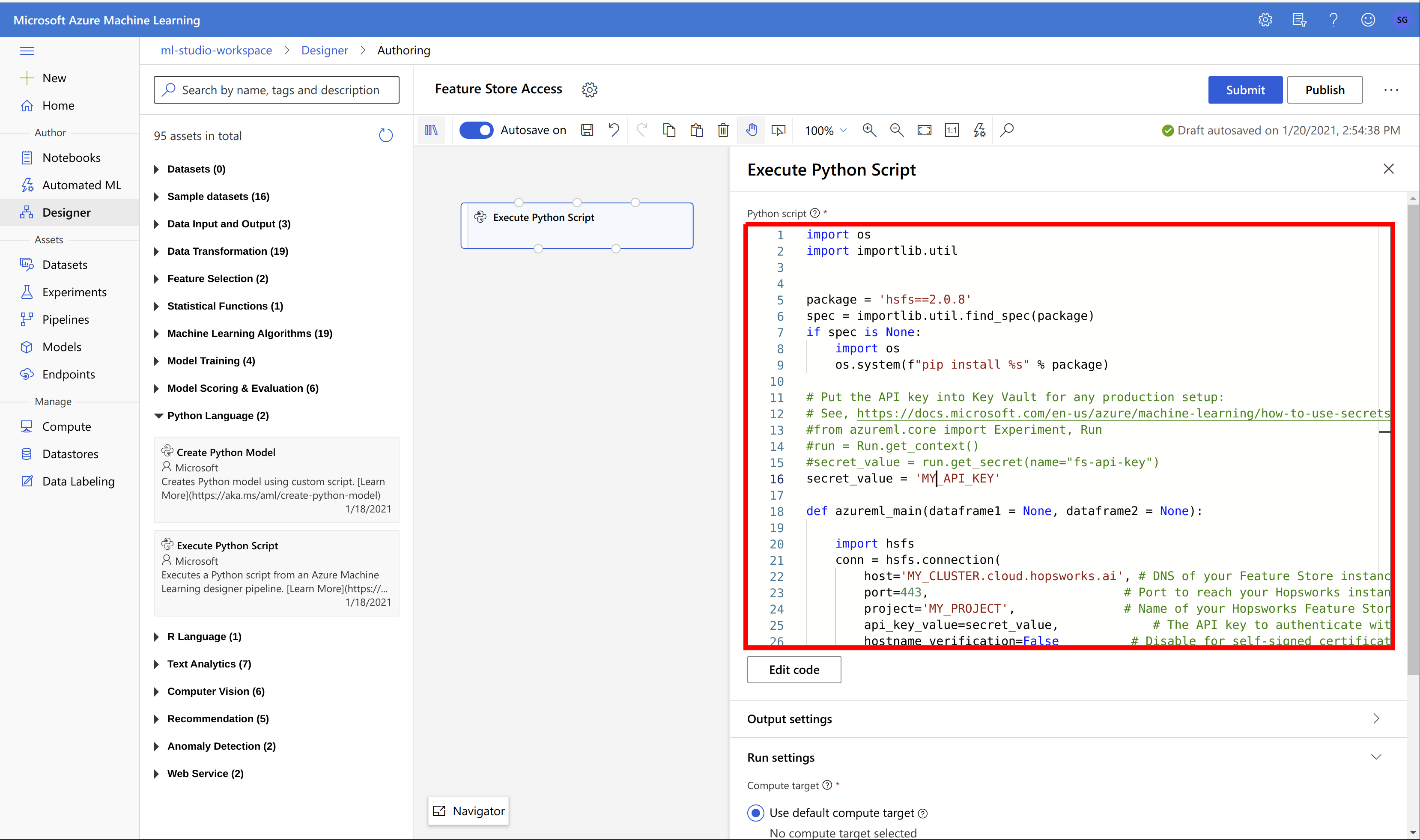

In the pipeline, add a new Execute Python Script step and replace its Python script with the script shown below:

Updating the script

Replace MY_VERSION, MY_API_KEY, MY_INSTANCE, MY_PROJECT and MY_FEATURE_GROUP with the respective values. The major version set for MY_VERSION needs to match the major version of Hopsworks. Check PyPI for available releases.

    import os
    import importlib.util

    package_name = 'hsfs'
    version = 'MY_VERSION'

    # Install HSFS with the Hive extra only if it is not already available
    spec = importlib.util.find_spec(package_name)
    if spec is None:
        os.system(f"pip install {package_name}[hive]=={version}")

    # Put the API key into Key Vault for any production setup:
    # See, https://docs.microsoft.com/en-us/azure/machine-learning/how-to-use-secrets-in-runs
    #from azureml.core import Experiment, Run
    #run = Run.get_context()
    #secret_value = run.get_secret(name="fs-api-key")
    secret_value = 'MY_API_KEY'

    def azureml_main(dataframe1 = None, dataframe2 = None):

        # Import here so that the install step above has already run
        import hsfs

        conn = hsfs.connection(
            host='MY_INSTANCE.cloud.hopsworks.ai', # DNS of your Feature Store instance
            port=443,                              # Port to reach your Hopsworks instance, defaults to 443
            project='MY_PROJECT',                  # Name of your Hopsworks Feature Store project
            api_key_value=secret_value,            # The API key to authenticate with Hopsworks
            hostname_verification=True,            # Disable for self-signed certificates
            engine='hive'                          # Choose Hive as engine
        )
        fs = conn.get_feature_store()              # Get the project's default feature store

        # The trailing comma is intentional: the step must return a sequence of DataFrames
        return fs.get_feature_group('MY_FEATURE_GROUP', version=1).read(),
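The requirement that the major version of HSFS matches the major version of Hopsworks can be checked before a version is pinned in the script. The sketch below is only an illustration: the helper names `major_version` and `majors_match` are hypothetical, the version strings in the usage note are made-up examples, and it assumes plain dotted version strings whose first component is an integer.

```python
def major_version(version):
    """Return the leading numeric component of a version string such as '2.5.1'."""
    return int(version.strip().split(".")[0])


def majors_match(hsfs_version, hopsworks_version):
    """Return True when both version strings share the same major version."""
    return major_version(hsfs_version) == major_version(hopsworks_version)
```

For example, `majors_match("2.5.1", "2.5.0")` evaluates to `True`, while `majors_match("3.0.0", "2.5.0")` evaluates to `False`, which would signal that a different HSFS release should be chosen from PyPI.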

Select a compute target and save the step. The step is now ready to use:

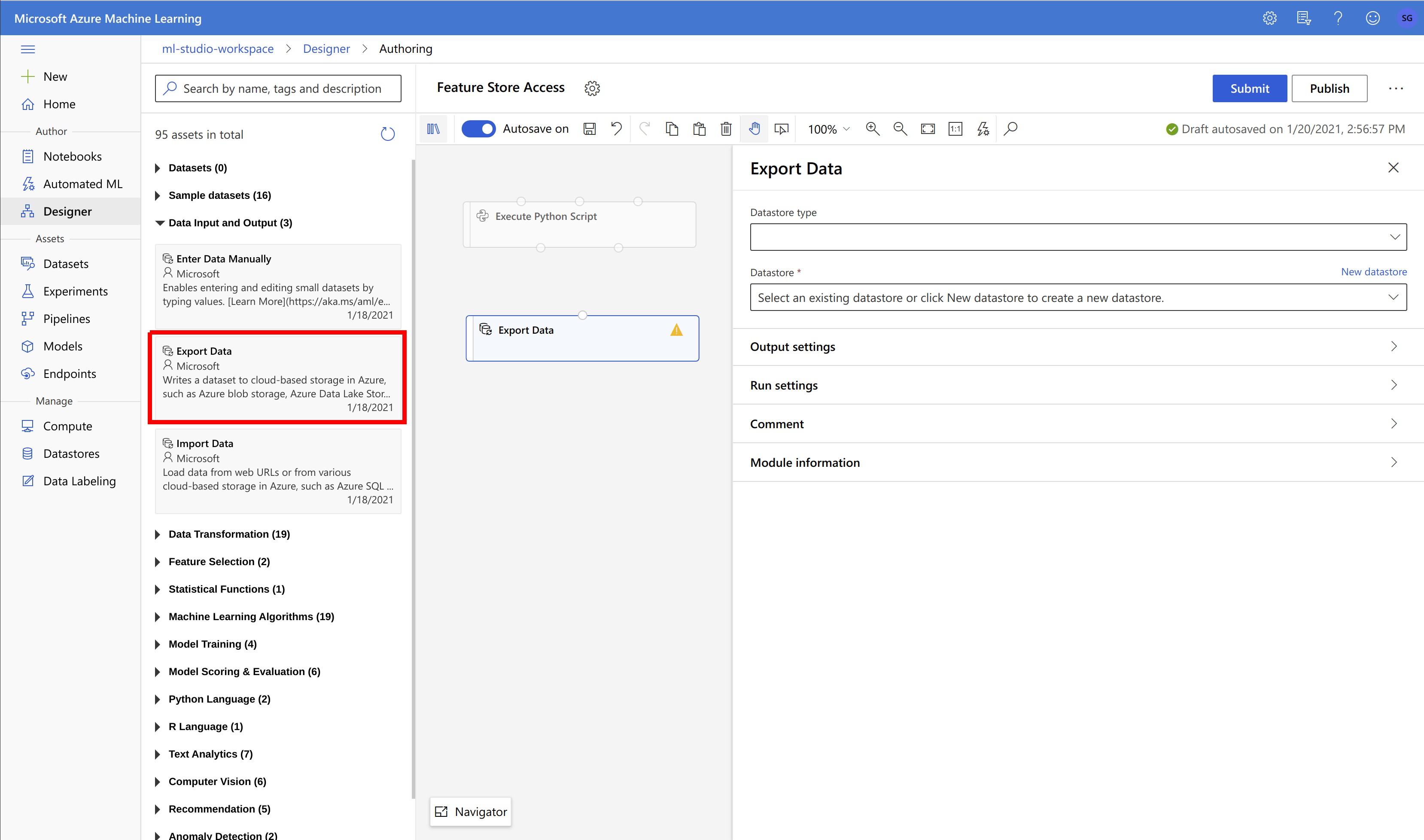

As a next step, connect the previously created Execute Python Script step with the next step in the pipeline. For instance, to export the features to a CSV file, create an Export Data step:

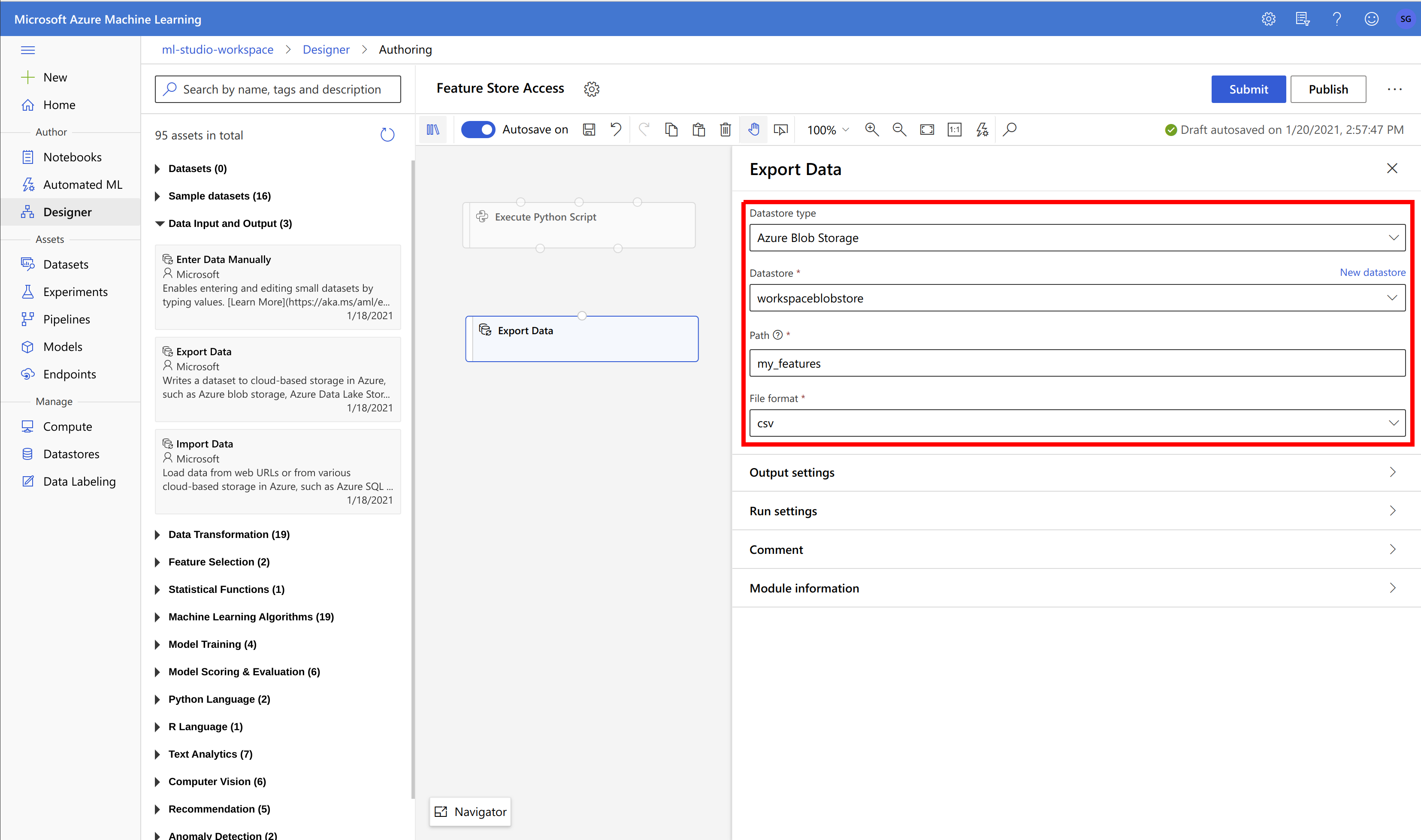

Configure the Export Data step to write to your data store of choice:

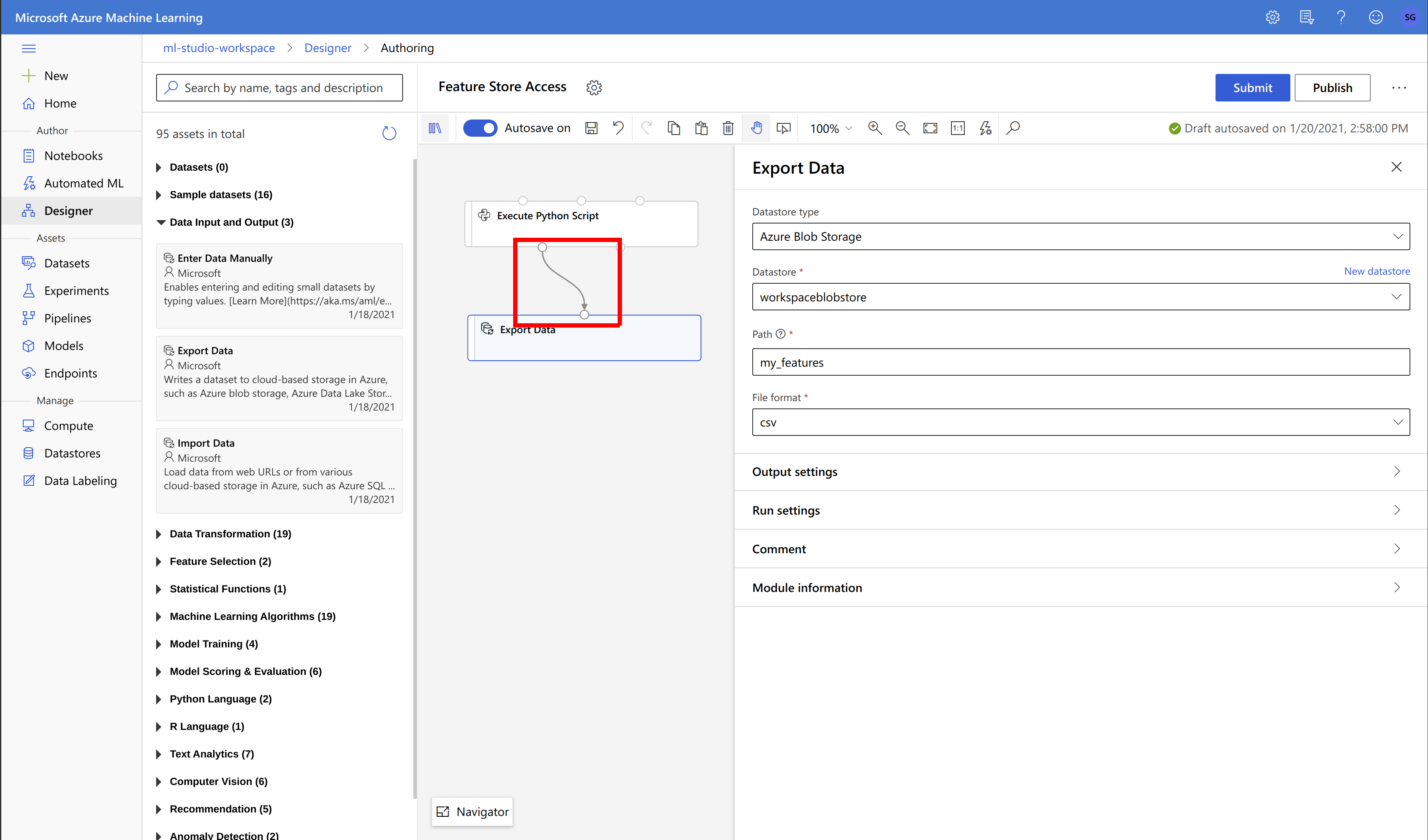

Connect the two steps by drawing a line between them:

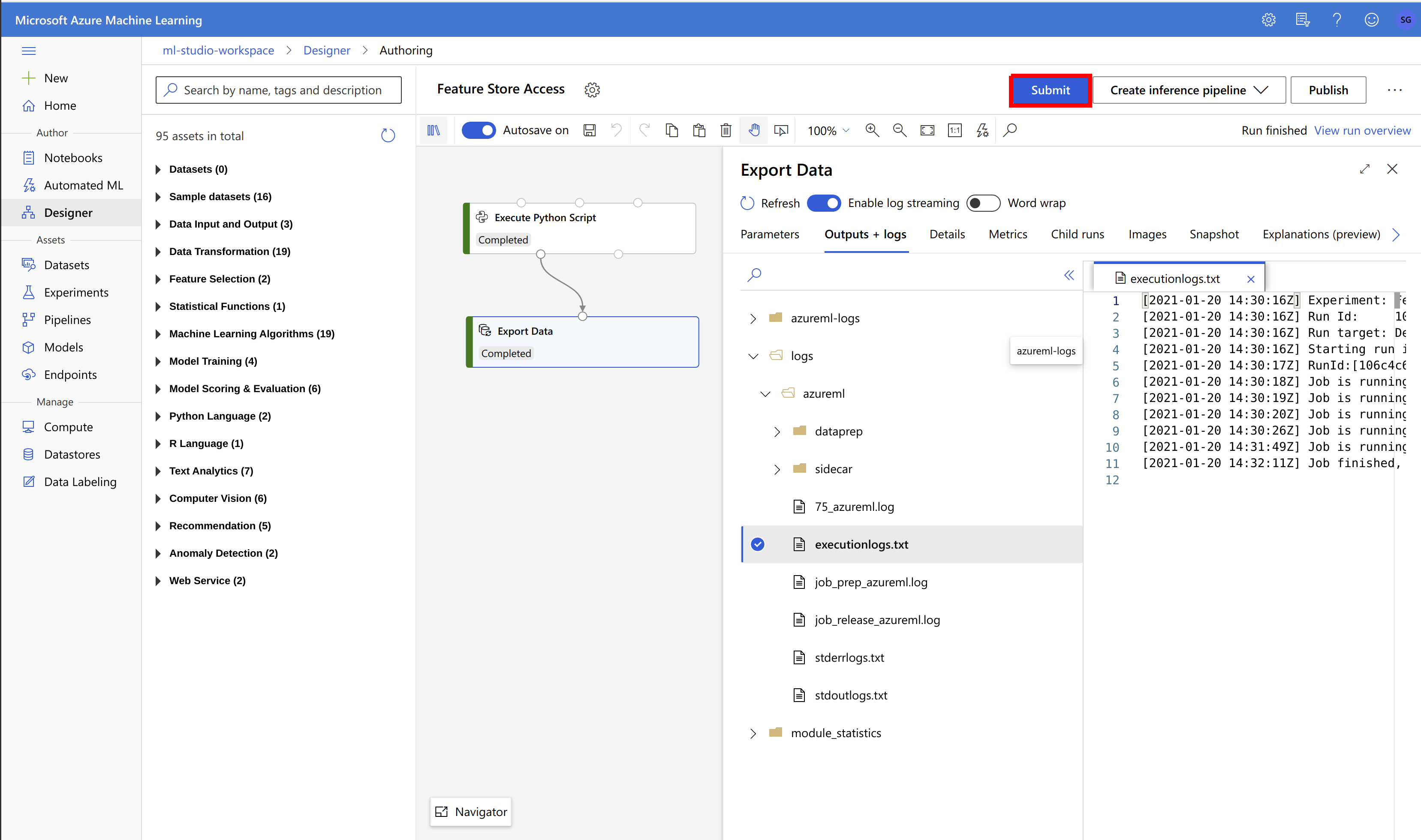

Finally, submit the pipeline and wait for it to finish.

Performance on the first execution

The Execute Python Script step can be slow when being executed for the first time as the HSFS library needs to be installed on the compute target. Subsequent executions on the same compute target should use the already installed library.
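The install-once behaviour described here can be sketched as a small helper that only calls pip when the package cannot be imported. This is a hedged variant of the install logic in the script, not the method HSFS prescribes: the function name `ensure_package` and its `installer` parameter are hypothetical, and the check only tests whether the package is importable, not whether the installed version matches the requested one.

```python
import importlib.util
import subprocess
import sys


def ensure_package(name, version, extras="", installer=subprocess.check_call):
    """Install name[extras]==version with pip unless the package is importable.

    Returns True if an install was triggered, False if the package was found.
    """
    if importlib.util.find_spec(name) is not None:
        return False
    if extras:
        requirement = f"{name}[{extras}]=={version}"
    else:
        requirement = f"{name}=={version}"
    # Use the interpreter running this script so pip targets the same environment.
    installer([sys.executable, "-m", "pip", "install", requirement])
    return True


# Example usage (replace MY_VERSION first):
# ensure_package("hsfs", "MY_VERSION", extras="hive")
```

Calling pip through `sys.executable -m pip` and `subprocess.check_call` raises an error when the installation fails, whereas `os.system` only returns a status code that is easy to overlook.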

Next Steps

For more information about how to use the Feature Store, see the Quickstart Guide.