Storage Connector Guides

You can define storage connectors in Hopsworks for batch and streaming data sources. Storage connectors securely store the authentication information needed to connect to an external data store. They can be used from programs running within Hopsworks or externally.

There are three main use cases for Storage Connectors:

- Read data directly from the external storage into a dataframe.

- Define External (on-demand) Feature Groups with a storage connector as the data source. This way, Hopsworks stores only the metadata about the features, but does not keep a copy of the data itself. This is also called the Connector API.

- Write training data to an external storage system to make it accessible by third parties.
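As an illustration of the first two use cases, here is a minimal sketch in Python. It assumes the HSFS Python client, where a feature store handle exposes `get_storage_connector` and `create_external_feature_group`, and a connector exposes `read`. The helper names, connector names, and queries are hypothetical, and the exact arguments accepted depend on the connector type and client version.

```python
from typing import Any, List, Optional


def read_into_dataframe(
    fs: Any,
    connector_name: str,
    query: Optional[str] = None,
    data_format: Optional[str] = None,
    path: Optional[str] = None,
) -> Any:
    """Use case 1: read data from external storage into a dataframe.

    `fs` is assumed to be a feature store handle, e.g. obtained via
    `hopsworks.login().get_feature_store()`.
    """
    connector = fs.get_storage_connector(connector_name)
    # SQL-style connectors typically take `query`; file-based connectors
    # typically take `data_format` and `path`. Pass only what was given.
    kwargs = {}
    if query is not None:
        kwargs["query"] = query
    if data_format is not None:
        kwargs["data_format"] = data_format
    if path is not None:
        kwargs["path"] = path
    return connector.read(**kwargs)


def register_external_feature_group(
    fs: Any,
    connector_name: str,
    name: str,
    query: str,
    primary_key: List[str],
    version: int = 1,
) -> Any:
    """Use case 2: register an external feature group (metadata only)."""
    connector = fs.get_storage_connector(connector_name)
    feature_group = fs.create_external_feature_group(
        name=name,
        version=version,
        query=query,
        storage_connector=connector,
        primary_key=primary_key,
    )
    # Persist the metadata; the data itself stays in the external store.
    feature_group.save()
    return feature_group


# Hypothetical usage against a live cluster (not executed here):
#   import hopsworks
#   fs = hopsworks.login().get_feature_store()
#   df = read_into_dataframe(fs, "my_connector", query="SELECT * FROM orders")
```

Keeping the feature store handle as a parameter makes the helpers easy to exercise with a stub object before pointing them at a real project.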

Storage connectors provide two main mechanisms for authentication: credentials or an authentication role (an IAM Role on AWS or a Managed Identity on Azure). Hopsworks supports either a single IAM role (AWS) or Managed Identity (Azure) for the whole Hopsworks cluster, or multiple IAM roles (AWS) or Managed Identities (Azure) that can only be assumed by users with a specific role in a specific project.

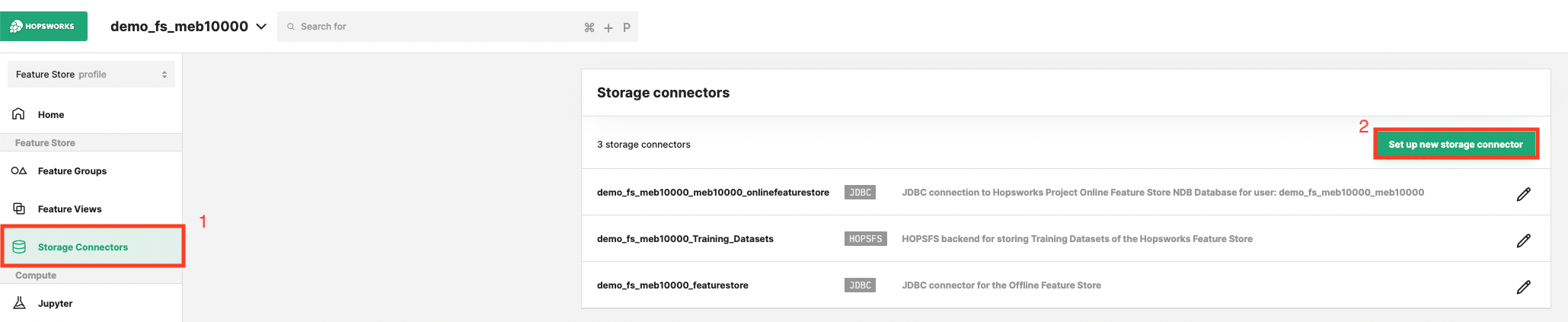

By default, each project is created with three Storage Connectors: a JDBC connector to the online feature store, a HopsFS connector to the Training Datasets directory of the project, and a JDBC connector to the offline feature store.

Cloud Agnostic

Cloud agnostic storage systems:

- JDBC: Connect to JDBC compatible databases and query them using SQL.

- Snowflake: Query Snowflake databases and tables using SQL.

- Kafka: Read data from a Kafka cluster into a Spark Structured Streaming Dataframe.

- HopsFS: Connect to and read from directories of Hopsworks' internal file system.
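For the Kafka connector in the list above, a streaming read might look like the following sketch. It assumes the HSFS Python client running on the Spark engine, and that the Kafka connector exposes a `read_stream` method taking `topic` and `message_format` arguments. The helper name, connector name, and topic are hypothetical.

```python
from typing import Any


def open_kafka_stream(
    fs: Any,
    connector_name: str,
    topic: str,
    message_format: str = "avro",
) -> Any:
    """Open a Spark Structured Streaming dataframe over a Kafka topic.

    `fs` is assumed to be a feature store handle with a Kafka storage
    connector registered under `connector_name`.
    """
    connector = fs.get_storage_connector(connector_name)
    # Assumption: `read_stream` is available on Kafka connectors and
    # requires a Spark environment.
    return connector.read_stream(topic=topic, message_format=message_format)


# Hypothetical usage inside a Hopsworks Spark job (not executed here):
#   stream_df = open_kafka_stream(fs, "my_kafka", "transactions")
```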

AWS

For AWS the following storage systems are supported:

- S3: Read data stored in S3 in a variety of file-based formats, such as Parquet or CSV.

- Redshift: Query Redshift databases and tables using SQL.

Azure

For Azure the following storage systems are supported:

- ADLS: Read data stored in ADLS in a variety of file-based formats, such as Parquet or CSV.

GCP

For GCP the following storage systems are supported:

- BigQuery: Query BigQuery databases and tables using SQL.

- GCS: Read data stored in Google Cloud Storage in a variety of file-based formats, such as Parquet or CSV.

Next Steps

Move on to the Configuration and Creation Guides to learn how to set up a storage connector.